Not every company is lucky enough to have so many appsec (or even infosec) folks that a security staffer can threat model everything. You're on an engineering team who own some services and you've been told by security that you should threat model your system; now what?

Since obviously the folks who own the systems have the deepest knowledge of them, this can sometimes turn out awesome. But, for non-security-folk, threat modeling can also seem arcane or hard to approach.

tl;dr

You don't have to be a security person or a hacker to start to understand what could go wrong in a system.

I'm gonna walk through how to apply a couple of different methods to begin to understand your system from the attacker's perspective through threat modeling. I want to demo how threat modeling can help engineers and developers spot gaps in a system which could lead to failures in production.

I'll start in a mildly unusual place, since it might not be that intuitive for folks without a security background to just do the thing.

Take Five

I'm gonna try (ab|mis)using some ideas from a framework operational postmortems sometimes follow called the Five Whys to lead into other methods more typical for threat modeling.

We'll iteratively build from a series (four, seven, actually five...) of "why" questions which we'll answer by describing the system and process up to objective and (in this case it's an imaginary system, so we're making it all up as we go along, but ideally...) factual statements about the people we think might attack our system and how we might defend our systems, so that we can defend intentionally.

This isn't a perfect application of the Five Whys, however. It's just a hack.

Three perspectives on threat

I view threat modeling as a structured way of looking at systems to determine what parts are most important to protect or refine from a security perspective to improve the system's operational viability.

Data-centric

The most typical style of threat modeling I know of (STRIDE and similar methods fit here) could be thought of as data-centric. These methods seem to mainly involve drawing data flows and thinking through what data is most important to keep safe, then thinking about how it could be accessed in ways we don't want.

System-centric

Another way folks might somewhat less-intentionally threat model: simply understanding the system deeply and what it should / what it should not be able to do, then applying that knowledge to systems-level protections.

Attacker-centric

A third is based in trying to thoroughly understand the attacker and what they are most likely to want, then focusing on the parts of the system most interesting to the attacker to protect first.

Combined!

Not novel, but I'd like to apply techniques from all three of the categories I've defined so that we look at the system from multiple angles as well as from the attacker perspective to best understand what could go wrong.

Architecture

Threat modeling (in any form) should be an iterative exercise, revisited whenever the system undergoes behaviour- or structure-affecting changes. As the system evolves, the team's threat model should be updated with it. We'll play with two versions of the same system, with an eye toward understanding how our threat model changes when we add new features or refactor.

One

Here's a fairly vanilla client/server setup. The client directly calls some APIs backed by a variety of datastores.

Two

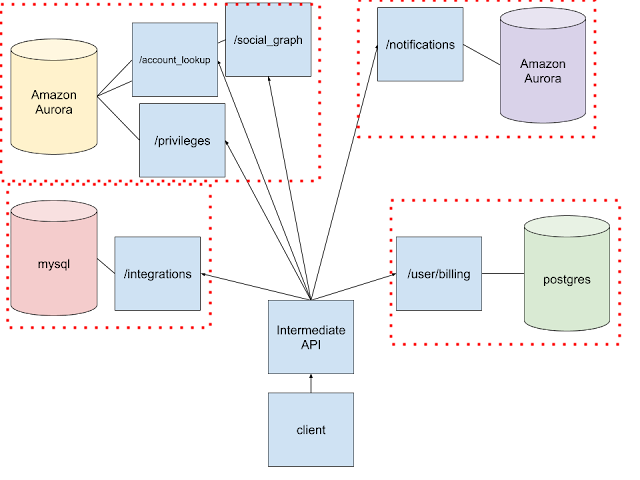

The second version of our system includes an intermediate API combining results from the other APIs behind it to provide the client a smaller and more tailored result.

Personae (non gratae)

We can build attacker personae sort of like we'd construct Agile-style customer personae during product development. We'll apply gathered facts clustered by similarity in the same way a product manager might gather info through customer interviews and other means, and group by various customer attributes.

In the real world, we could gather data to start from via:

- examining logs and scans for repeated anomalies ("x happens and so does y at the same time, and we don't know why" might be something we're interested in using as a starting point for a threat model)

- aggregating reports to our bug bounty or VDP by type of issue and attack

- getting to know the folks who frequently report to us through our BBP or VDP

- keeping up with attack writeups and related reporting

- our infosec teams' general knowledge

- history of prior incidents and how they were resolved

- pentest reports

- red team exercises

- ...

Here are our attackers, who I've named completely at random:

- Akash wants our user account data (maybe we got this pattern from a prior incident and a pentest report which exposed some of the same issues)

- Jenna is a bug bounty hunter looking for vulns on subdomains and APIs in scope for our BBP

- Frankie is automating account creation on our platform for a freelance job (maybe we got this pattern from our service logs and scans)

I also think it's important to call out that none of these folks are actively malicious; they're just applying their skills in ways the market and our systems allow.

Attacker detail: System 1

Akash wants active VIP accounts.

Why - there is a market (brokers, buyers) for high-profile user accounts

Why - $company has very quickly / recently become very popular and has a large user graph, but the maturity of $company's systems can't yet support the userbase entirely

Why - the systems crash often, the APIs are pretty open, and there isn't much concern for security or opswork

Why - quick-growth survival mode is different from long-term-stability-mode, and the engineering culture is currently very focused around the former rather than the latter

Jenna wants to find vulns on subdomains and APIs included in our bug bounty.

Why - we pay well and try to treat the folks who report to us kindly, even if they report findings we consider invalid

Why - it costs money, time, and sweat to build skills needed to provide findings we consider useful

Why - the market will provide Jenna other avenues for her skills if we don't

Why - the market values thoughtful and clear findings reports and bug bounty participants who treat the admins with respect

Frankie wants to make fake accounts.

Why - fake engagement if well leveraged can sometimes result in real engagement over time

Why - the public can't always tell fake engagement from real engagement if it's done well

Why - the clients will pay for a small network of unrelated-enough accounts plus some automation to glue them together

Why - the clients are burgeoning influencers who would like to become more well known and get better sponsorships
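If it helps to keep these chains somewhere more durable than a whiteboard, here's a minimal sketch of one way to record a persona and its whys as data. The `Persona` class and its field names are made up for illustration; nothing about the method requires this shape.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Persona:
    """One attacker persona plus the chain of 'why' answers behind its goal."""
    name: str
    goal: str
    whys: List[str] = field(default_factory=list)

    def root_cause(self) -> str:
        # The last 'why' is the deepest cause we reached so far.
        return self.whys[-1] if self.whys else self.goal


akash = Persona(
    name="Akash",
    goal="wants active VIP accounts",
    whys=[
        "there is a market for high-profile user accounts",
        "$company grew quickly and its systems can't yet support the userbase",
        "the systems crash often and the APIs are pretty open",
        "the engineering culture is focused on quick-growth survival",
    ],
)

print(akash.name, "->", akash.root_cause())
```

Keeping the chains as data makes it easier to diff them when the system changes and we redo the exercise.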

More system detail

Now that we have some semi-reasonably motivated attackers to think about, let's talk about what they might focus on in System #1. We'll add a little more detail to the APIs first.

We don't need to always apply all our attacker personae to sketch what might be interesting about the system every time we change the system, but we will this time. The more detail we have to start with, the more detail we can work with over time as needed.

Jenna only cares about the /social_graph API, which is in scope for bug bounty.

Why - the other APIs have been scoped out of the bug bounty

Why - the other APIs are currently very fragile compared to /social_graph

Why - run-the-business work is currently prioritized over tech debt and operational hardening work

Why - the engineering culture is primarily focused around supporting fast growth versus long term stability (sound familiar?)

Akash is mainly interested in /account_lookup, /privileges, and /user/billing.

Why - through recon, a list of all the APIs and subdomains the company owns was identified, and then narrowed down to just what would be useful to build an appealing dataset

Why - the integrations and notifications APIs serve "additional info" useful for the client in presenting a nice UI, not account data directly

Why - the notion of account is still centralised around a few key APIs

Why - these additional APIs were added as the notion of "account" was slowly expanded out from a monolith into a series of microservices

Why - the refactoring was done ad hoc

Why - the process was not well defined beforehand in a fashion including incremental milestones with definite security and ops goals

Frankie is interested in all the APIs.

Why - one can bypass the client to build accounts how the system expects them to look, then use the account lookup API to check everything got set up correctly

(we'll split this thought into two directions...)

Part A

Why - the non-social_graph APIs don't rate limit client IDs other than the one hardcoded into the client

Why - folks want to be able to run load tests in production without the rate limiting on so they can observe actual capacity

Why - load testing to ensure a certain SLA can be met has been written into one of the enterprise client user contracts

Why - the backend had difficulty handling a denial-of-service attack six months ago because no rate limiting existed then

Why - system hardening tasks like adding rate limiting were put off when the system was built to meet an initial delivery deadline, and then backlogged

Part B

Why - the account lookup API returns every single account attribute as part of a nice clean JSON

Why - the lookup API specification doesn't match its current task well

Why - the lookup API was first built as part of a different feature and got reused in what is currently in production without retailoring to meet current customer needs

Why - (see last why of Part A as well) corners got cut to meet an initial delivery deadline
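To make Part B a bit more concrete, here's a minimal sketch of trimming a lookup response down to an explicit allowlist of fields before it leaves the service. The field names and the `tailor_account` helper are hypothetical, since the account schema for this imaginary system was never specified.

```python
# Hypothetical field names: the real account schema is not specified anywhere.
CLIENT_VISIBLE_FIELDS = frozenset({"display_name", "avatar_url", "is_verified"})


def tailor_account(account: dict, allowed=CLIENT_VISIBLE_FIELDS) -> dict:
    """Return only the fields the client actually needs (allowlist, not denylist)."""
    return {key: value for key, value in account.items() if key in allowed}


full_record = {
    "display_name": "vip_user",
    "avatar_url": "https://example.invalid/a.png",
    "is_verified": True,
    "email": "vip@example.invalid",
    "billing_token": "tok_demo",
}

print(tailor_account(full_record))
```

An allowlist means a new attribute added to the account record later stays private until someone deliberately exposes it, which is the opposite of what the current "return everything" behaviour does.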

What have we learned?

- account lookup API could be (with security and performance justifications) refactored to match current use case

- we might want to consider rate limiting other client IDs than just the one for the official client

- we may not want to spend quite as much time on the integrations and notifications APIs in terms of security protections compared to the more closely account-related APIs, depending on what other information we discover as we go
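As a rough illustration of the second point, here's a small token-bucket sketch that limits every client ID it sees, not just a hardcoded one. The class name, the rate and burst numbers, and the in-memory dictionary are all assumptions for the example; a real deployment would more likely lean on a gateway or shared store.

```python
import time


class PerClientRateLimiter:
    """Token bucket keyed by client ID; unknown IDs get a bucket too."""

    def __init__(self, rate_per_sec: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.burst = burst
        self.clock = clock          # injectable so the limiter can be tested
        self._buckets = {}          # client_id -> (tokens, last_seen)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(client_id, (float(self.burst), now))
        # Refill based on elapsed time, capped at the burst size.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client_id] = (tokens - 1, now)
            return True
        self._buckets[client_id] = (tokens, now)
        return False


limiter = PerClientRateLimiter(rate_per_sec=1.0, burst=2)
print(limiter.allow("official-web"), limiter.allow("random-load-test-id"))
```

Load tests that need to bypass limits could get an explicit, short-lived exemption instead of the default being "everything except the official client is unlimited".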

Adding in the intermediate API

The second version of our system has an additional API layer which the client calls instead of calling the backing APIs directly.

This extra layer could be GraphQL, or maybe a WAF or other application doing some security checks, or... a thin REST API designed to combine the data from less tailored APIs so the client only has to make a single call and gets back just what it needs, instead of making a bunch of slower calls and having to discard data that isn't important to the user experience.

This API could be doing additional input sanitisation or other data preprocessing to clean input up (which the legacy APIs it fronts may not be able to include without heavy refactoring).

For now, it's just a thin, rate limited REST API designed to wrangle those internal APIs without any special security sauce. There's one main endpoint combining/reducing the backing APIs' data.

Let's forget about the previous Whys for a second and redo against the second iteration of our system.

Jenna is interested most in the intermediate API.

Why - the intermediate API has more low-hanging bugs compared to /social_graph, which has been in scope for the BBP since iteration #1 of the system was first released

Why - the intermediate API exposes some of the vulnerabilities of the more fragile APIs (not directly in BBP scope) backing it

Why - the intermediate API combines data from the other, more fragile APIs, and like the rest of the system doesn't rate limit client IDs other than the official web app

Why - the implications of only rate limiting the official client in order to not rate limit randomly-generated client IDs used for load testing were not thought through

Why - security and other operational hardening concerns are second priority to moving fast and getting things in prod

Frankie remains interested in the individual backing APIs.

Why - hitting the backing APIs directly using a non-rate-limited client ID is still most efficient compared to going through the client or intermediate API or both

Why - the intermediate API tailors its requests to what the client needs

Why - the main priority in designing and building the intermediate API was client performance

Why - users have been complaining about slow client response times

Why - the fake-account-making automation is quietly taking up a significant chunk of the backing API resources

Why - the backing APIs are overly permissive in terms of authentication and rate limiting

(though the following extra why might not come to light unless e.g. folks from engineering cultures with more investment in logging/monitoring/observability get hired on and try to implement those things, I think it's also worth calling out...)

Why - there is insufficient per-client-ID logging/monitoring/observability, so it is unclear what is actually causing the slow client response times other than high load on some of the backing APIs at certain hours

What else did we learn?

We've also discovered that being better at understanding data flow through the system on a per-client-ID basis would provide value from a security as well as an operational perspective.
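Here's a minimal sketch of what "per-client-ID" visibility could look like if we had structured request logs. The record shape (`client_id`, `endpoint`) and the helper names are assumptions; real logs would come from whatever logging pipeline the team has.

```python
from collections import Counter


def requests_per_client(log_records):
    """Count requests per (client ID, endpoint) pair."""
    counts = Counter()
    for rec in log_records:
        counts[(rec["client_id"], rec["endpoint"])] += 1
    return counts


def top_talkers(log_records, n=3):
    """Client IDs ordered by total request volume, busiest first."""
    totals = Counter(rec["client_id"] for rec in log_records)
    return totals.most_common(n)


# Made-up sample records for illustration only.
records = [
    {"client_id": "official-web", "endpoint": "/account_lookup"},
    {"client_id": "random-7f3a", "endpoint": "/account_lookup"},
    {"client_id": "random-7f3a", "endpoint": "/privileges"},
    {"client_id": "random-7f3a", "endpoint": "/account_lookup"},
]

print(top_talkers(records, n=1))
```

Even a crude tally like this would have pointed at an unofficial client ID eating backing API capacity, rather than leaving the team to guess at "high load at certain hours".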

Datastores and more

Lack of boundaries invites lack of respect

It's possible attackers can damage or extract data from unexpected parts of the system if they can compromise just one part, since we have (as currently drawn) minimal systems-level security protections aside from a halfhearted attempt at client rate limiting.

Attackers (in arch version #2) can still hit any of our backing APIs directly, despite us having the intermediate API.

We also don't have any serverside data sanitization in place for either input from client(s) or output from our datastores.

Trust boundaries should go anyplace data crosses from one "system" within our system (from the user to us, from the client to the API, from the API to AWS...) to another.

Exploring trust

We've settled on trusting neither the client, nor the intermediate API, at this point. Effectively, the intermediate API is the client from system version #1, and (not to overindex on rate limiting here) should be rate limited and otherwise treated just like any other client.

What's the point of all the lines?

- rate limit all possible client IDs talking to the intermediate API

- we are likely interested in adding service to service authentication of some type to our setup, along with client allowlisting, so that, for example, only the intermediate API can talk to the backing APIs, and our friend Frankie can't hit them directly anymore because they aren't allowlisted

- we probably want data sanitization in our intermediate API (at the very least before we pass user input on to our backing APIs), unless we choose to add it to our client
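For the service-to-service authentication and allowlisting idea, here's a minimal sketch using an HMAC over the request body with a shared secret per allowlisted caller. The service names, the secret, and the helper functions are hypothetical, and this leaves out things a real setup needs (key rotation, replay protection, or simply using mTLS instead).

```python
import hashlib
import hmac

# Hypothetical allowlist: only callers listed here may talk to a backing API.
SERVICE_KEYS = {"intermediate-api": b"demo-only-secret"}


def sign_request(service_id: str, body: bytes, key: bytes) -> str:
    """Caller side: sign the service ID and body with the shared key."""
    message = service_id.encode() + b"\n" + body
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(service_id: str, body: bytes, signature: str) -> bool:
    """Backing API side: reject unknown callers and bad signatures."""
    key = SERVICE_KEYS.get(service_id)
    if key is None:
        return False  # not on the allowlist
    expected = sign_request(service_id, body, key)
    return hmac.compare_digest(expected, signature)


body = b'{"account_id": 42}'
signature = sign_request("intermediate-api", body, SERVICE_KEYS["intermediate-api"])
print(verify_request("intermediate-api", body, signature))
```

With something like this in front of the backing APIs, a script that calls them directly without a key gets refused instead of quietly served.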

STRIDE

Spoofing

- It's possible to impersonate the intermediate API to both the client and the backing APIs, in the current setup.

- Nothing as documented prevents attackers from CSRFing our users

Tampering

- Any of our attackers could potentially figure out how to affect just parts of existing accounts, since none of our APIs have proper authentication

- There are no documented mechanisms preventing stored XSS in our current setup (leaving aside the other kinds for now since we're not really paying attention to the client so much at the moment)
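One common mitigation for the stored XSS point is escaping stored values whenever they are written into HTML. Here's a minimal sketch using Python's standard `html.escape`; the `render_display_name` helper and the markup around it are made up for the example, and escaping for other contexts (attributes, JavaScript, URLs) needs its own handling.

```python
import html


def render_display_name(stored_value: str) -> str:
    """Escape a stored value before placing it inside an HTML element body."""
    return '<span class="name">' + html.escape(stored_value, quote=True) + "</span>"


print(render_display_name("<script>alert(1)</script>"))
```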

Repudiation

- Frankie can just directly hit the backing APIs and the teams who own them haven't got a clue why their users are complaining. They don't have a good way to tell what client did what.

Information Disclosure

- Akash can build a curated collection of VIP account access / data using our APIs

- If someone could hit our databases directly (which... nothing in the above architecture as described says they can't), they wouldn't need to go through our APIs to create or collect account info

Denial of Service

- When Frankie makes a bunch of accounts our legit userbase experiences degraded service because the backing APIs are occupied

Elevation of Privilege

- If a user doesn't want to be rate limited and is technologically capable, they can stop going through the regular client, which is rate limited, and do just what Frankie is doing in hitting the backing APIs

- Jenna can (particularly given how underspecified the system currently is) potentially force a race condition in the three account APIs which could lead to her account gaining additional privileges it doesn't or shouldn't have currently as created through the web client, via manipulating requests to / through the intermediate API

Some other frameworks which might be interesting instead of or in addition to STRIDE: MITRE ATT&CK and NIST SP 800-154 (a draft guide to data-centric system threat modeling).

We could go further here and walk through these plus possibly others, but I think I'll leave it here for now because I'm pretty much writing a novel and hopefully have left enough points of interest for someone who didn't know much about threat modeling before to sink in some hooks and google some stuff and follow some links (I hope it's a fun discovery process, too!!!).

But there's one more step I'd like to do - relating what we think could happen in the system, back to the system - checking our work. I never see anyone doing this with threat modeling and I think it's as useful here as it is in math or CS theory proofs.

Checking our work

Let's consider some of the statements from STRIDE first. We're going to include / reference 'data' here based on some of the colour we've already added to this system and engineering organisation previously.

Jenna can (particularly given how underspecified the system currently is) potentially force a race condition in the three account APIs which could lead to her account gaining additional privileges it doesn't or shouldn't have currently as created through the web client, via manipulating requests to / through the intermediate API.

Why - the interactions between the three APIs hitting the same data store are not clearly specified.

Why - in the architectural diagrams we drew / spent a bunch of time drawing red dotted lines on, it's become clear that we don't have a good model for which parts of the account data structure should be accessed in what order

Why - the APIs organically grew out of one API which did all the actions, as folks exploded a monolithic service into microservices perhaps, but the data interactions were not also split out so that we could have a one to one relationship between API action and data store

Why - no technical design was followed in making this refactor, or it could have been more clear to everyone involved that there are direct dependencies between the types of data the APIs access in the data store
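One way to picture a fix for this kind of race is optimistic concurrency: every write must name the version of the record it read, and stale writes are refused. This is a minimal in-memory sketch under that assumption; `AccountStore` and `VersionConflict` are invented names, and a real data store would enforce the check itself (for example with a conditional update).

```python
import threading


class VersionConflict(Exception):
    """Raised when a write is based on a stale read."""


class AccountStore:
    def __init__(self):
        self._rows = {}                 # account_id -> (version, data)
        self._lock = threading.Lock()

    def read(self, account_id):
        with self._lock:
            return self._rows.get(account_id, (0, {}))

    def write(self, account_id, expected_version, data):
        # Check-and-set happens under one lock so two writers can't interleave.
        with self._lock:
            current_version, _ = self._rows.get(account_id, (0, {}))
            if current_version != expected_version:
                raise VersionConflict(
                    f"expected v{expected_version}, found v{current_version}"
                )
            self._rows[account_id] = (current_version + 1, dict(data))
            return current_version + 1


store = AccountStore()
version, _ = store.read("jenna")
print(store.write("jenna", version, {"role": "user"}))
```

If two of the account APIs both read version 0 and then both try to write, the second write fails loudly instead of silently clobbering (or combining with) the first.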

So, now we have justification for changes we might make to the system, and also some potential process improvements we can make as well!

Let's do this for one more, and then maybe call it the end of this post since you likely get the idea...

Any of our attackers could potentially figure out how to affect just parts of existing accounts, since none of our APIs have proper authentication

Why - we didn't understand the risks involved in only authenticating a single client ID

Why - we already have user-level / account-level authentication, and we thought that would be sufficient to protect user information we store

Why - we aren't subject to things like SOX (let's say $company isn't public) and we don't store credit card data (we use a third party vendor who already meets PCI DSS level 1), so we don't have to undergo regular third party pentests. We could do GDPR-focused pentesting, but we don't currently

Why - we assume if we aren't seeing much go wrong in our observability tooling (we don't have IDS or SIEM tooling), everything is fine, and if anything goes wrong it's a problem caused by legitimate users

Why - $company do not have enough folks with security experience to provide the partnership necessary at every stage of the SDLC to prevent building a system like this

Conclusion

Applying somewhat overlapping threat modeling techniques can help us to catch some information we might not otherwise catch, threat modeling as the system evolves helps us understand it better, and we can even use hacks built on techniques we might be more familiar with to help gather enough data to be able to approach less familiar techniques.

We haven't gotten into "part by part, can we say this system does or does not meet certain security properties" vs as a whole, but perhaps that's better left for a follow-on.